Those who spill ink over the authenticity of Google’s Gemini multimodal demo video, or over how Gemini compares to GPT-4, are missing the true revolution in Google’s announcement.

Today the market is dominated by large (very large) LLMs. From Mistral to GPT-4, including LlaMa 2, all these models, whose performance is regularly compared on various benchmarks, are not designed to run well on smartphones.

However, Google’s Gemini family also includes Gemini Nano, available with 1.8 or 3.25 billion parameters and designed to run on Android devices. (As a reminder, Mistral 7B and the smallest LlaMa 2 model both have 7 billion parameters.)
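To make the size gap concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. The parameter counts come from the text above; the bit widths (16-bit and 4-bit) are illustrative assumptions, and the function names are hypothetical. Activations, KV cache and runtime overhead are ignored, so real footprints will be higher.

```python
# Rough estimate of weight storage only; not a measurement of any real deployment.

def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate size of the weights alone, in gigabytes (10^9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Parameter counts (in billions) quoted in the article.
MODELS = {
    "Gemini Nano (1.8B)": 1.8,
    "Gemini Nano (3.25B)": 3.25,
    "7B model (Mistral 7B / smallest LlaMa 2)": 7.0,
}


def footprint_table(bits_options=(16, 4)):
    """Map each model name to {bits_per_weight: approximate GB}."""
    return {
        name: {bits: weight_memory_gb(params, bits) for bits in bits_options}
        for name, params in MODELS.items()
    }


if __name__ == "__main__":
    for name, sizes in footprint_table().items():
        row = ", ".join(f"{bits}-bit: {gb:.2f} GB" for bits, gb in sizes.items())
        print(f"{name}: {row}")
```

Under these assumptions, a 7B model at 16 bits needs about 14 GB for its weights, which is beyond what a typical phone can spare, while a 3.25B model at 4 bits needs roughly 1.6 GB.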

What must be understood here is the paradigm shift: Generative AI capabilities that were until now reserved for the cloud are becoming accessible directly on mobile devices.

Gemini: small models, high performance

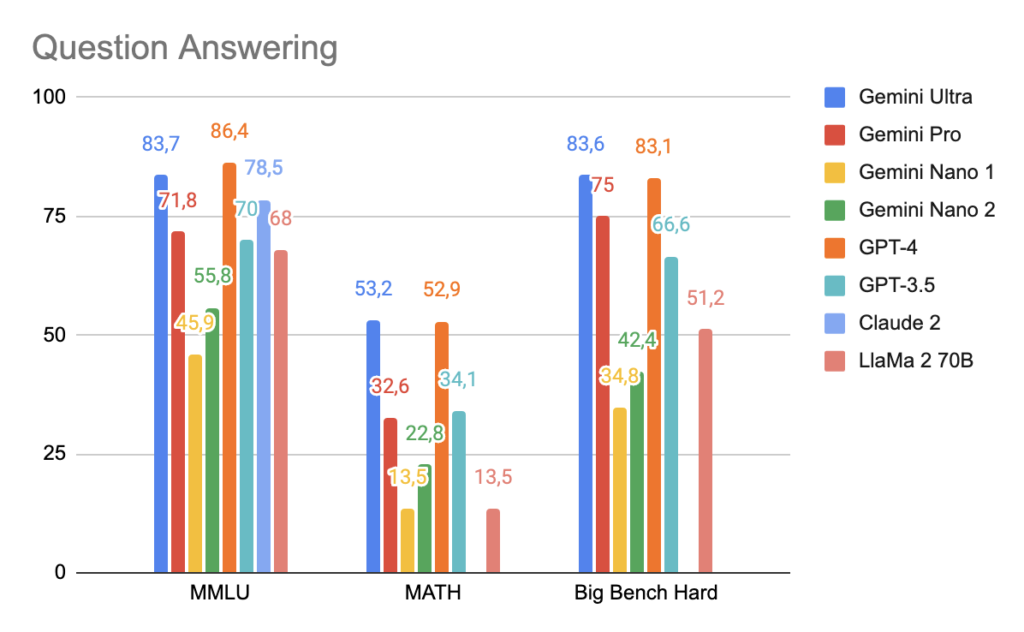

Let’s compare the capabilities of Gemini Nano on different tasks, keeping in mind that it can run fast enough on a smartphone while the others require large GPUs to achieve acceptable inference latency.

On question answering and reasoning tasks, the Gemini Nano models remain less accurate than their big brothers.

Nano models fall short of the others because they are smaller. However, Gemini Nano 2 (3.25B) is only about 10 points below LlaMa 2 on some benchmarks, and even surpasses it on others, even though the latter is roughly 20x larger!

To understand the true value of Gemini Nano models, we need to look at three tasks: machine translation, image understanding and speech recognition.

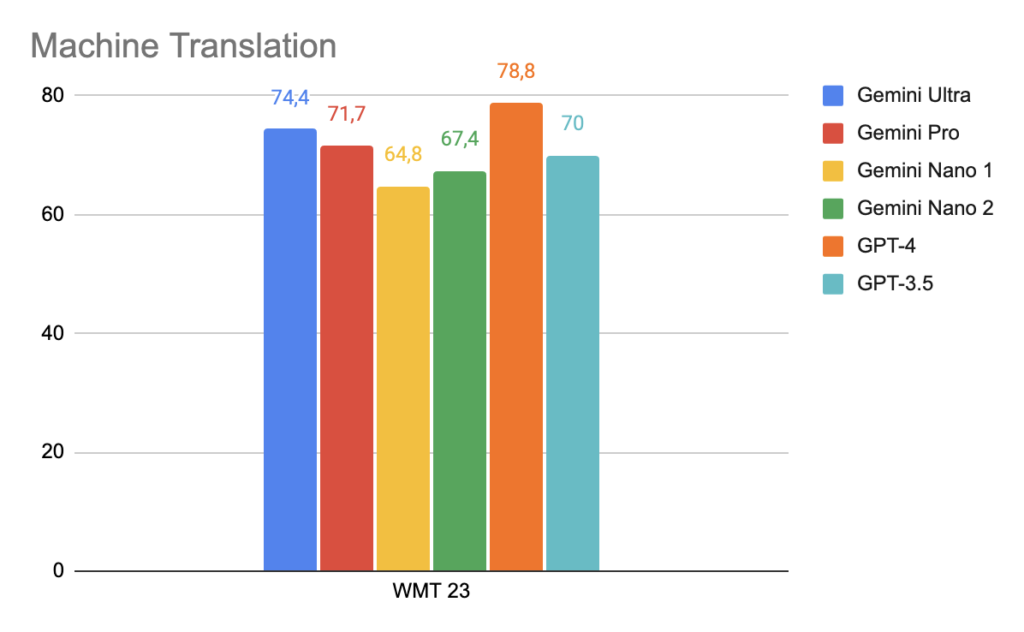

Machine Translation

In multilingual translation, Gemini Nano models are only 10 points behind GPT-4.

Since smartphones are already widely used as translators, a model capable of translating locally will improve latency and, above all, reduce cloud bills!

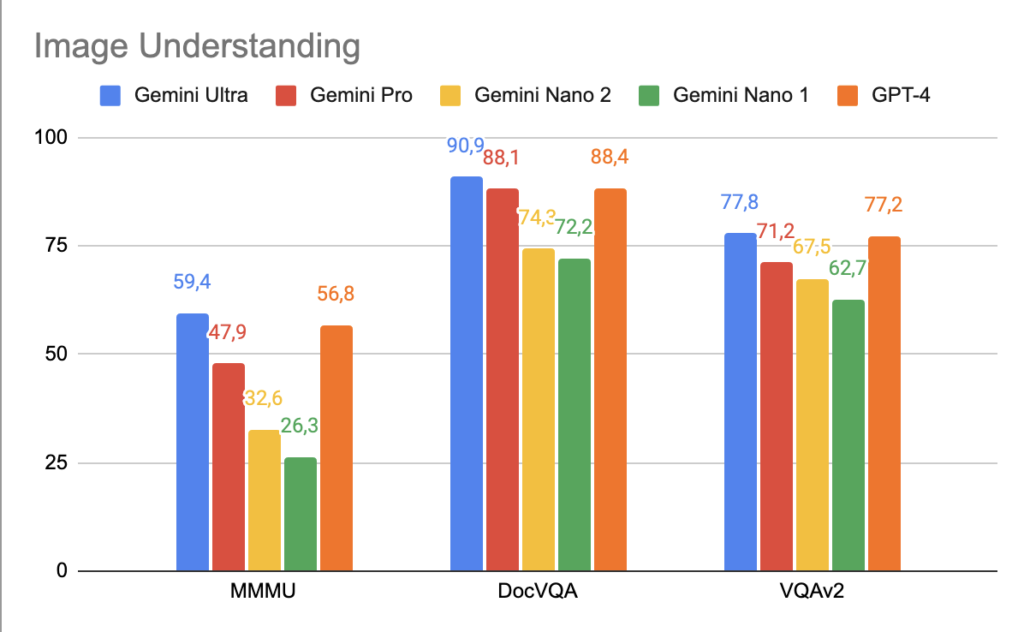

Image Understanding

The image understanding capabilities of Gemini Nano models are on average only 10 points behind other models.

The possibilities offered by image understanding directly on smartphones will open up a whole new range of applications.

We can think in particular of applications like Be My Eyes, which helps visually impaired people by describing the objects they photograph.

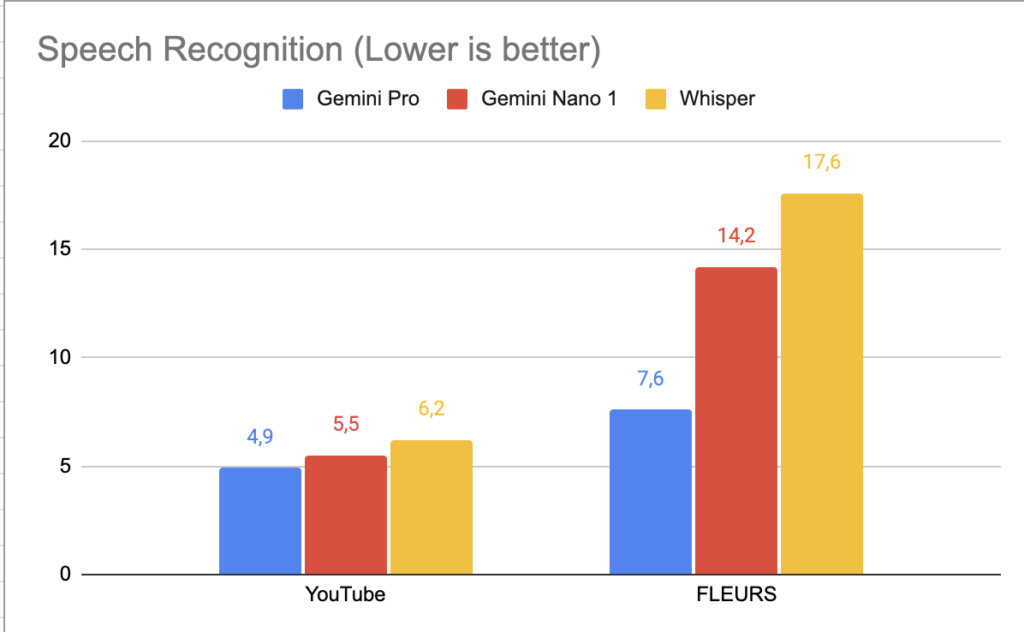

Speech Recognition

On speech recognition, Gemini Nano does even better than the latest version of Whisper, which was the best model until now.

Conclusion

The Gemini Nano models are the real revolution in the world of GenAI, which was until now dominated by very large models designed for the cloud.

With their 32,000-token context window and their truly multimodal capabilities, the models of the Gemini family are an indisputable innovation.

A whole new range of applications will become possible from December, when Gemini Nano is due to be deployed on Google Pixel 8 smartphones.

Here again, it is an innovation that will benefit the Android ecosystem, while Apple remains for the moment far behind in Generative AI.

Chart sources are available in the Gemini technical report.